For nearly 10 years now, BGP has been the dynamic routing protocol of choice for data center networks, from deployments of just a few racks to hundreds of racks. It is a mature protocol that has routed the Internet for decades, and most engineers have at least a basic understanding of how it operates.

In this post, I will cover a topic I believe should be considered for both greenfield and brownfield deployments of a BGP-based spine/leaf L3 fabric: MP-BGP (Multiprotocol BGP), with a focus on using IPv6 link-local addresses for peering.

One of the first things that needs to be addressed (pun intended) when designing a data center deployment or refresh is the addressing scheme. If you are building a Layer 3 spine/leaf ECMP fabric, you need to plan how you will address the point-to-point Layer 3 links in your fabric. The obvious approach is to set aside a prefix, such as a /22 (or smaller or larger, depending on the size of the fabric), and carve it into /31s for the spine-to-leaf links. Another option is to use IPv6 link-local addresses, so you are not consuming finite address space for your underlay fabric. Paired with MP-BGP, this IPv6 link-local peering can carry both the IPv4 and IPv6 address families (in a future blog post I will write about using an IPv6 link-local underlay to build an IPv6 EVPN overlay that provides VXLAN tunneling). This approach makes the fabric easier to scale and simpler to deploy, because there is no underlay link addressing to plan or track.
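For comparison, here is roughly the bookkeeping that link-local peering avoids. The Python sketch below carves a block into /31s (the RFC 3021 point-to-point convention) and assigns one per spine-leaf link for the 2-spine, 4-leaf lab described below. The 10.0.0.0/22 block is a hypothetical placeholder, since the post does not name one, and one link per spine-leaf pair reflects this lab's cabling rather than a general rule.

```python
import ipaddress
from itertools import product

# Hypothetical underlay block; the post does not specify one.
UNDERLAY_BLOCK = ipaddress.ip_network("10.0.0.0/22")
SPINES = ["spine1", "spine2"]
LEAVES = ["leaf1", "leaf2", "leaf3", "leaf4"]

def plan_p2p_links(block, spines, leaves):
    """Give every spine-leaf pair its own /31, taken in order from `block`."""
    pairs = list(product(spines, leaves))        # one link per pair, as in the lab
    subnets = list(block.subnets(new_prefix=31))
    if len(pairs) > len(subnets):
        raise ValueError(f"{block} only holds {len(subnets)} /31s, need {len(pairs)}")
    plan = {}
    for (spine, leaf), subnet in zip(pairs, subnets):
        spine_ip, leaf_ip = subnet               # a /31 contains exactly two addresses
        plan[(spine, leaf)] = (subnet, spine_ip, leaf_ip)
    return plan

plan = plan_p2p_links(UNDERLAY_BLOCK, SPINES, LEAVES)
print(f"{len(plan)} of {UNDERLAY_BLOCK.num_addresses // 2} /31s used")
for (spine, leaf), (subnet, spine_ip, leaf_ip) in plan.items():
    print(f"{subnet}: {spine} {spine_ip} <-> {leaf} {leaf_ip}")
```

Every new leaf adds rows to this table and matching lines to the configs on both ends of each link, which is exactly the planning step that link-local peering removes.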

Let’s dig into how this works. I will be using Arista vEOS for the lab, but these capabilities are fairly standard across vendors.

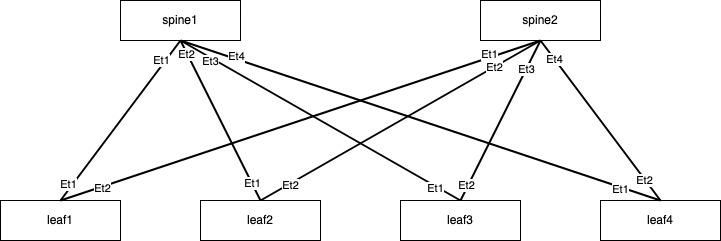

First, here’s what our lab looks like:

A straight forward 6 node spine/leaf fabric with 2 spine switches, and 4 leaf switches – all interconnected redundantly.

The first step is to convert the fabric ports to routed ports and then enable IPv6 on them. This generates a link-local address derived from the port’s MAC address:

```
spine1#conf t
spine1(config)#int Et1
spine1(config-if-Et1)#no switchport
spine1(config-if-Et1)#ipv6 enable
spine1(config-if-Et1)#show active
interface Ethernet1
   no switchport
   ipv6 enable
```

Configure every port that interconnects the fabric the same way. Afterward, you can confirm that all your interfaces have IPv6 link-local addresses:

```
spine1#show ipv6 interface brief
Interface  Status  MTU    IPv6 Address                 Addr State   Addr Source
---------- ------- ------ ---------------------------- ------------ -----------
Et1        up      1500   fe80::5200:ff:fed7:ee0b/64   up           link local
Et2        up      1500   fe80::5200:ff:fed7:ee0b/64   up           link local
Et3        up      1500   fe80::5200:ff:fed7:ee0b/64   up           link local
Et4        up      1500   fe80::5200:ff:fed7:ee0b/64   up           link local
```
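The `ff:fe` in the middle of these addresses is the signature of a modified EUI-64 interface identifier (RFC 4291, Appendix A). The 48-bit MAC is split in half, `FF:FE` is inserted, and the universal/local bit (0x02 in the first octet) is inverted. The Python sketch below reproduces that mapping in both directions. The MAC `50:00:00:d7:ee:0b` does not appear in the post; it is what spine1's address decodes to, so treat it as an inference. All four ports sharing one link-local address is fine, because a link-local address only has to be unique on its own link.

```python
import ipaddress

def eui64_link_local(mac: str) -> ipaddress.IPv6Address:
    """fe80::/64 address built from a 48-bit MAC via modified EUI-64 (RFC 4291, App. A)."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError(f"expected a 6-octet MAC, got {mac!r}")
    octets[0] ^= 0x02                                   # invert the universal/local bit
    interface_id = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])  # insert FF:FE
    return ipaddress.IPv6Address(b"\xfe\x80" + bytes(6) + interface_id)

def mac_from_link_local(address: str) -> str:
    """Reverse the mapping for an EUI-64-derived address."""
    interface_id = ipaddress.IPv6Address(address).packed[8:]   # low 64 bits
    if interface_id[3:5] != b"\xff\xfe":
        raise ValueError(f"{address} does not look EUI-64 derived")
    mac = bytearray(interface_id[:3] + interface_id[5:])
    mac[0] ^= 0x02                                      # undo the U/L bit inversion
    return ":".join(f"{b:02x}" for b in mac)

# MAC inferred from spine1's address in the output above (not shown in the post).
print(eui64_link_local("50:00:00:d7:ee:0b"))           # fe80::5200:ff:fed7:ee0b
print(mac_from_link_local("fe80::5200:ff:fed5:5dc0"))  # 50:00:00:d5:5d:c0 (leaf1)
```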

Now that all of our interfaces are set up with IPv6 link-local addresses, we can move on to setting up our MP-BGP peerings. While we could configure these peerings statically, I am going to use peer discovery to set up our peering relationships dynamically, which keeps the configuration short. Each vendor has its own nuances with dynamic peer discovery, so I recommend consulting the documentation for whichever NOS you are using. I am using Arista vEOS in these examples.

First thing we’ll do is set up a Loopback interface. We’ll use this later for EVPN peering:

```
spine1(config)#interface Loopback0
spine1(config-if-Lo0)#ipv6 address 2001:db8:1::1/64
```

Now we’ll set up a prefix-list and a route-map to control our route advertisements. We’ll also set up a peer-filter for our dynamic peer discovery:

```
ipv6 prefix-list loopbacks_ipv6
   seq 10 permit 2001:db8::/32 le 64
!
route-map leaf permit 10
   match ipv6 address prefix-list loopbacks_ipv6
!
route-map leaf deny 999
!
peer-filter leaf
   10 match as-range 65001-65099 result accept
!
```

After this, we need to make sure IPv4 and IPv6 unicast routing are enabled. We’ll also turn on an additional knob that enables IPv4 forwarding over IPv6 interfaces:

```
!
ip routing ipv6 interfaces
ipv6 unicast-routing
!
```

Similar configurations, with minor adjustments, can be deployed across the fabric. Obviously, you’ll need to change the Loopback addresses on each switch. Use a scheme that makes sense for you; just be consistent, and you’ll thank yourself later.
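The lab's loopbacks follow a simple pattern. spine1 uses 2001:db8:1::/64, and leafN uses 2001:db8:1N:a::/64 for EVPN peering and 2001:db8:1N:b::/64 for the future VXLAN endpoint, as seen in the leaf1 config and the route table later in the post. The Python sketch below generates prefixes from that pattern and checks that each would pass the `loopbacks_ipv6` prefix-list (inside 2001:db8::/32, length 64 or shorter). Two parts are assumptions: spine2's 2001:db8:2::/64, and treating the leaf group as hex `0x10 + N`, which only matches the lab's visible numbering up to leaf9.

```python
import ipaddress
from typing import Tuple

DOC_PREFIX = ipaddress.IPv6Network("2001:db8::/32")  # same range as loopbacks_ipv6

def spine_loopback(n: int) -> ipaddress.IPv6Network:
    # spine1 -> 2001:db8:1::/64 (from the lab); spine2 -> 2001:db8:2::/64 (assumed)
    return ipaddress.IPv6Network(f"2001:db8:{n:x}::/64")

def leaf_loopbacks(n: int) -> Tuple[ipaddress.IPv6Network, ipaddress.IPv6Network]:
    group = f"{0x10 + n:x}"  # leaf1 -> "11", leaf4 -> "14"; beyond leaf9 is an assumption
    evpn = ipaddress.IPv6Network(f"2001:db8:{group}:a::/64")   # EVPN peering
    vtep = ipaddress.IPv6Network(f"2001:db8:{group}:b::/64")   # future VXLAN endpoint
    return evpn, vtep

def passes_prefix_list(net: ipaddress.IPv6Network) -> bool:
    # Mirrors: seq 10 permit 2001:db8::/32 le 64
    return net.subnet_of(DOC_PREFIX) and net.prefixlen <= 64

all_prefixes = [spine_loopback(n) for n in (1, 2)]
all_prefixes += [p for n in range(1, 5) for p in leaf_loopbacks(n)]
if not all(passes_prefix_list(p) for p in all_prefixes):
    raise ValueError("a loopback would be filtered by loopbacks_ipv6")
if len(set(all_prefixes)) != len(all_prefixes):
    raise ValueError("two switches share a loopback prefix")
for prefix in all_prefixes:
    print(prefix)
```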

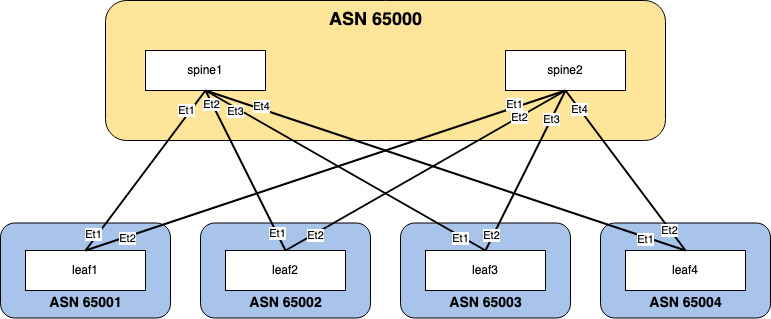

Finally we’re ready to start enabling BGP across the fabric and establish our peerings. Here is what the BGP topology in my lab looks like:

I’m using ASN 65000 for the spine layer, and incrementing ASNs for the leaf switches – starting with 65001 for leaf1, 65002 for leaf2, etc, etc.
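The numbering is regular enough to generate. The Python sketch below encodes this ASN scheme together with the router-ids that appear in the BGP configs later (spine1 uses 10.255.255.1, leaf1 uses 10.255.255.11). Extending the router-id pattern to the other switches (spineN to .N, leafN to .10+N) is an assumption. The sketch also checks that every ASN sits in the 16-bit private-use range, 64512 to 65534 (RFC 6996), and that no two router-ids collide.

```python
import ipaddress

SPINE_ASN = 65000                    # every spine shares one ASN, as in the lab
LEAF_ASN_BASE = 65000                # leafN -> 65000 + N (leaf1 = 65001, ...)
ROUTER_ID_POOL = ipaddress.IPv4Network("10.255.255.0/24")  # assumed home for router-ids
PRIVATE_ASNS = range(64512, 65535)   # RFC 6996 16-bit private-use range (64512-65534)

def spine_params(n: int) -> dict:
    # spine1 -> 10.255.255.1 (from the lab); other spines assumed to follow suit
    return {"name": f"spine{n}", "asn": SPINE_ASN, "router_id": str(ROUTER_ID_POOL[n])}

def leaf_params(n: int) -> dict:
    # leaf1 -> 10.255.255.11 (from the lab); other leaves assumed to follow suit
    return {"name": f"leaf{n}", "asn": LEAF_ASN_BASE + n,
            "router_id": str(ROUTER_ID_POOL[10 + n])}

def build_plan(num_spines: int, num_leaves: int) -> list:
    plan = [spine_params(n) for n in range(1, num_spines + 1)]
    plan += [leaf_params(n) for n in range(1, num_leaves + 1)]
    for entry in plan:
        if entry["asn"] not in PRIVATE_ASNS:
            raise ValueError(f"{entry['name']}: ASN {entry['asn']} is outside the private range")
    if len({entry["router_id"] for entry in plan}) != len(plan):
        raise ValueError("router-ids collide; widen the gap between spines and leaves")
    return plan

for entry in build_plan(num_spines=2, num_leaves=4):
    print(entry)
```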

Let’s look at the BGP configuration for our spine switches, which looks slightly different from that of our leaf switches:

```
router bgp 65000
   router-id 10.255.255.1
   bgp default ipv4-unicast transport ipv6
   neighbor leaf peer group
   neighbor leaf route-map leaf in
   neighbor leaf route-map leaf out
   neighbor interface Et1-4 peer-group leaf peer-filter leaf
   !
   address-family ipv4
      neighbor leaf activate
      neighbor leaf next-hop address-family ipv6 originate
   !
   address-family ipv6
      neighbor leaf activate
      network 2001:db8:1::/64
!
```

We’re setting the router-id manually because there aren’t any interfaces with an IPv4 address configured yet. We’re enabling IPv4 unicast over IPv6 transport and setting up a peer group named leaf. Then comes the good bit: dynamic peer discovery over IPv6 link-local addresses, which is done with the neighbor interface command. It sets which interfaces are used to build dynamic IPv6 link-local peers, attaches those peers to our peer group, and applies the peer-filter we created earlier to define the range of acceptable leaf ASNs.

Afterward, we activate the peer group in the IPv4 and IPv6 address families. In the IPv6 address family, we also add our Loopback network to BGP so that it is advertised across the fabric for EVPN peering, which will come later. The additional next-hop command under IPv4 allows us to use an IPv6 address as the next hop for any IPv4 routes we advertise.
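The peer-filter is what stops dynamic discovery from peering with anything that shows up on Et1-4. Conceptually, the spine compares the ASN a discovered neighbor presents in its BGP OPEN message against the filter's rules. The Python sketch below models that check for `peer-filter leaf`. Treating a neighbor that matches no rule as rejected is an assumption here, so confirm the default behavior in the EOS documentation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PeerFilterRule:
    seq: int
    as_low: int
    as_high: int
    result: str  # "accept" or "reject"

# Mirrors: peer-filter leaf / 10 match as-range 65001-65099 result accept
PEER_FILTER_LEAF = [PeerFilterRule(seq=10, as_low=65001, as_high=65099, result="accept")]

def peer_filter_accepts(rules, remote_asn: int) -> bool:
    for rule in sorted(rules, key=lambda r: r.seq):      # lowest sequence number first
        if rule.as_low <= remote_asn <= rule.as_high:
            return rule.result == "accept"
    return False  # assumption: a neighbor that matches no rule is not accepted

# Neighbors spine1 discovers on Et1-4, with the ASNs from the lab.
discovered = {"Et1": 65001, "Et2": 65002, "Et3": 65003, "Et4": 65004}
for interface, asn in discovered.items():
    print(interface, asn, "accept" if peer_filter_accepts(PEER_FILTER_LEAF, asn) else "reject")

# Hypothetical miscabling cases.
print("another spine (65000):", peer_filter_accepts(PEER_FILTER_LEAF, 65000))
print("leaf100 (65100):", peer_filter_accepts(PEER_FILTER_LEAF, 65100))
```

A side effect of this design is that a spine accidentally cabled to another spine (AS 65000) will not form a session, and the leaf numbering scheme has to stay inside 65001-65099 unless the filter is widened.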

Now let’s look at the configuration from leaf1:

```
router bgp 65001
   router-id 10.255.255.11
   bgp default ipv4-unicast transport ipv6
   maximum-paths 32
   neighbor spine peer group
   neighbor spine route-map spine in
   neighbor spine route-map spine out
   neighbor interface Et1-2 peer-group spine remote-as 65000
   !
   address-family ipv4
      neighbor spine activate
      neighbor spine next-hop address-family ipv6 originate
   !
   address-family ipv6
      neighbor spine activate
      network 2001:db8:11:a::/64
      network 2001:db8:11:b::/64
!
```

More or less similar, with a couple of differences. There is no need for a peer-filter on the leaves: both spines use AS 65000, so we simply specify remote-as 65000 on the neighbor interface command. We’re also setting ECMP maximum-paths to 32, although in this lab it doesn’t need to be higher than 2, since each leaf has only two uplinks. In addition, you’ll notice that we are adding two different networks to our IPv6 address family: one is for EVPN peerings, and the other we’ll use as our VXLAN endpoint later on.
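`maximum-paths 32` lets BGP install up to 32 equal-cost paths per prefix. The switch then spreads traffic across those paths per flow, typically by hashing packet header fields so that every packet of a flow takes the same uplink and arrives in order. The Python sketch below illustrates the idea for leaf1's two uplinks. SHA-256 over a 5-tuple is a stand-in chosen for readability, since switch ASICs use their own hash functions and configurable fields. The interface-to-spine mapping (Et1 to spine1, Et2 to spine2) and the flow addresses are hypothetical.

```python
import hashlib
from collections import Counter
from typing import NamedTuple, Sequence

class Flow(NamedTuple):
    src: str
    dst: str
    proto: int
    sport: int
    dport: int

def pick_path(flow: Flow, paths: Sequence[str], maximum_paths: int = 32) -> str:
    # BGP installs at most `maximum_paths` equal-cost routes. Which ones are kept when
    # there are more is implementation-specific; this sketch simply keeps the first N.
    installed = list(paths)[:maximum_paths]
    if not installed:
        raise ValueError("no usable paths")
    # Stand-in hash over the 5-tuple; the same flow always lands in the same bucket.
    key = "|".join(str(field) for field in flow).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(installed)
    return installed[bucket]

LEAF1_PATHS = ["Et1 (spine1)", "Et2 (spine2)"]   # assumed cabling

# 1000 hypothetical UDP flows to port 4789 (VXLAN) that differ only in source port.
flows = [Flow("2001:db8:11:b::1", "2001:db8:12:b::1", 17, 49152 + i, 4789) for i in range(1000)]
print(Counter(pick_path(f, LEAF1_PATHS) for f in flows))
```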

And that’s it. Fairly straightforward. We should now be able to see our BGP peerings:

```
spine1#show bgp summary
BGP summary information for VRF default
Router identifier 10.255.255.1, local AS number 65000
Neighbor                    AS          Session State AFI/SAFI                AFI/SAFI State   NLRI Rcd   NLRI Acc
--------------------------- ----------- ------------- ----------------------- -------------- ---------- ----------
fe80::5200:ff:fe03:3766%Et2 65002       Established   IPv4 Unicast            Negotiated              0          0
fe80::5200:ff:fe03:3766%Et2 65002       Established   IPv6 Unicast            Negotiated              2          2
fe80::5200:ff:fe15:f4e8%Et3 65003       Established   IPv4 Unicast            Negotiated              0          0
fe80::5200:ff:fe15:f4e8%Et3 65003       Established   IPv6 Unicast            Negotiated              2          2
fe80::5200:ff:fe72:8b31%Et4 65004       Established   IPv4 Unicast            Negotiated              0          0
fe80::5200:ff:fe72:8b31%Et4 65004       Established   IPv6 Unicast            Negotiated              2          2
fe80::5200:ff:fed5:5dc0%Et1 65001       Established   IPv4 Unicast            Negotiated              0          0
fe80::5200:ff:fed5:5dc0%Et1 65001       Established   IPv6 Unicast            Negotiated              2          2
```
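Checking this table by eye works for four leaves. As a rough sketch of automating the check, the Python below parses the neighbor rows shown above and flags any neighbor whose IPv4 Unicast or IPv6 Unicast entry is not Established and Negotiated. It depends on this exact column layout, so structured output from the switch would be a sturdier input for real tooling. The zero IPv4 NLRI counts are expected at this stage, because only IPv6 networks are advertised so far.

```python
from collections import defaultdict

# Header plus neighbor rows copied from the spine1 "show bgp summary" output above.
SUMMARY = """\
Neighbor AS Session State AFI/SAFI AFI/SAFI State NLRI Rcd NLRI Acc
fe80::5200:ff:fe03:3766%Et2 65002 Established IPv4 Unicast Negotiated 0 0
fe80::5200:ff:fe03:3766%Et2 65002 Established IPv6 Unicast Negotiated 2 2
fe80::5200:ff:fe15:f4e8%Et3 65003 Established IPv4 Unicast Negotiated 0 0
fe80::5200:ff:fe15:f4e8%Et3 65003 Established IPv6 Unicast Negotiated 2 2
fe80::5200:ff:fe72:8b31%Et4 65004 Established IPv4 Unicast Negotiated 0 0
fe80::5200:ff:fe72:8b31%Et4 65004 Established IPv6 Unicast Negotiated 2 2
fe80::5200:ff:fed5:5dc0%Et1 65001 Established IPv4 Unicast Negotiated 0 0
fe80::5200:ff:fed5:5dc0%Et1 65001 Established IPv6 Unicast Negotiated 2 2
"""

def parse_summary(text: str) -> dict:
    """Map (neighbor, asn) -> {"IPv4 Unicast": (state, afi_state, rcd, acc), ...}."""
    sessions = defaultdict(dict)
    for line in text.splitlines():
        fields = line.split()
        # Data rows have exactly 8 fields and start with a link-local neighbor address.
        if len(fields) != 8 or not fields[0].startswith("fe80::"):
            continue
        neighbor, asn, state, afi, safi, afi_state, rcd, acc = fields
        sessions[(neighbor, int(asn))][f"{afi} {safi}"] = (state, afi_state, int(rcd), int(acc))
    return dict(sessions)

def problems(sessions: dict, required=("IPv4 Unicast", "IPv6 Unicast")) -> list:
    issues = []
    for (neighbor, asn), families in sorted(sessions.items()):
        for family in required:
            entry = families.get(family)
            if entry is None or entry[:2] != ("Established", "Negotiated"):
                issues.append(f"{neighbor} (AS {asn}): {family} is not up")
    return issues

sessions = parse_summary(SUMMARY)
print(len(sessions), "neighbors;", problems(sessions) or "all address families up")
```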

Each neighbor appears twice, once per address family: IPv4 Unicast and IPv6 Unicast are both negotiated over the same link-local session. We can also confirm that the prefixes we’re learning are installed in our route table:

```
spine1#show ipv6 route

VRF: default
Displaying 9 of 13 IPv6 routing table entries
Codes: C - connected, S - static, K - kernel, O3 - OSPFv3,
       B - Other BGP Routes, A B - BGP Aggregate, R - RIP,
       I L1 - IS-IS level 1, I L2 - IS-IS level 2, DH - DHCP,
       NG - Nexthop Group Static Route, M - Martian,
       DP - Dynamic Policy Route, L - VRF Leaked,
       RC - Route Cache Route

 C        2001:db8:1::/64 [0/0]
           via Loopback0, directly connected
 B E      2001:db8:11:a::/64 [200/0]
           via fe80::5200:ff:fed5:5dc0, Ethernet1
 B E      2001:db8:11:b::/64 [200/0]
           via fe80::5200:ff:fed5:5dc0, Ethernet1
 B E      2001:db8:12:a::/64 [200/0]
           via fe80::5200:ff:fe03:3766, Ethernet2
 B E      2001:db8:12:b::/64 [200/0]
           via fe80::5200:ff:fe03:3766, Ethernet2
 B E      2001:db8:13:a::/64 [200/0]
           via fe80::5200:ff:fe15:f4e8, Ethernet3
 B E      2001:db8:13:b::/64 [200/0]
           via fe80::5200:ff:fe15:f4e8, Ethernet3
 B E      2001:db8:14:a::/64 [200/0]
           via fe80::5200:ff:fe72:8b31, Ethernet4
 B E      2001:db8:14:b::/64 [200/0]
           via fe80::5200:ff:fe72:8b31, Ethernet4
```

Nice! We have a great start to our spine/leaf fabric. In the next post, I’ll cover building an IPv6 EVPN overlay that we can use to provide VXLAN across our fabric.
